Prerequisites

Have the Spark dependencies configured in IntelliJ IDEA.

Add the Elasticsearch dependency to the pom.xml file:

<!-- https://mvnrepository.com/artifact/org.elasticsearch/elasticsearch-spark-20 -->
<dependency>
  <groupId>org.elasticsearch</groupId>
  <artifactId>elasticsearch-spark-20_2.11</artifactId>
  <version>6.4.2</version>
</dependency>

Example of writing to a local Elastic 6.4.2 instance from Scala 2.11 (the Scala version targeted by the elasticsearch-spark-20_2.11 artifact).

package com.scala

import org.apache.spark.{SparkConf, SparkContext}
import org.elasticsearch.spark._ // adds saveToEs to RDDs

object App {
  def main(args: Array[String]): Unit = {
    // Spark runs locally; the es.* settings point at the local Elastic node
    val conf = new SparkConf()
      .setAppName("App")
      .setMaster("local")
      .set("es.nodes", "localhost")
      .set("es.port", "9200")
      .set("es.scheme", "http")

    // Sample documents: each Map becomes one document
    val m1 = Map("grupo" -> "A", "timestamp" -> 1540550120, "duration" -> 6)
    val m2 = Map("grupo" -> "B", "timestamp" -> 1540550130, "duration" -> 10)
    val m3 = Map("grupo" -> "A", "timestamp" -> 1540550140, "duration" -> 13)
    val m4 = Map("grupo" -> "B", "timestamp" -> 1540550150, "duration" -> 18)
    val m5 = Map("grupo" -> "C", "timestamp" -> 1540550160, "duration" -> 6)
    val m6 = Map("grupo" -> "B", "timestamp" -> 1540550170, "duration" -> 10)
    val m7 = Map("grupo" -> "A", "timestamp" -> 1540550180, "duration" -> 13)
    val m8 = Map("grupo" -> "C", "timestamp" -> 1540550190, "duration" -> 18)

    val sc = new SparkContext(conf)

    // Write the eight documents to index "elastic_list", type "docs"
    sc.makeRDD(Seq(m1, m2, m3, m4, m5, m6, m7, m8)).saveToEs("elastic_list/docs")

    sc.stop()
  }
}

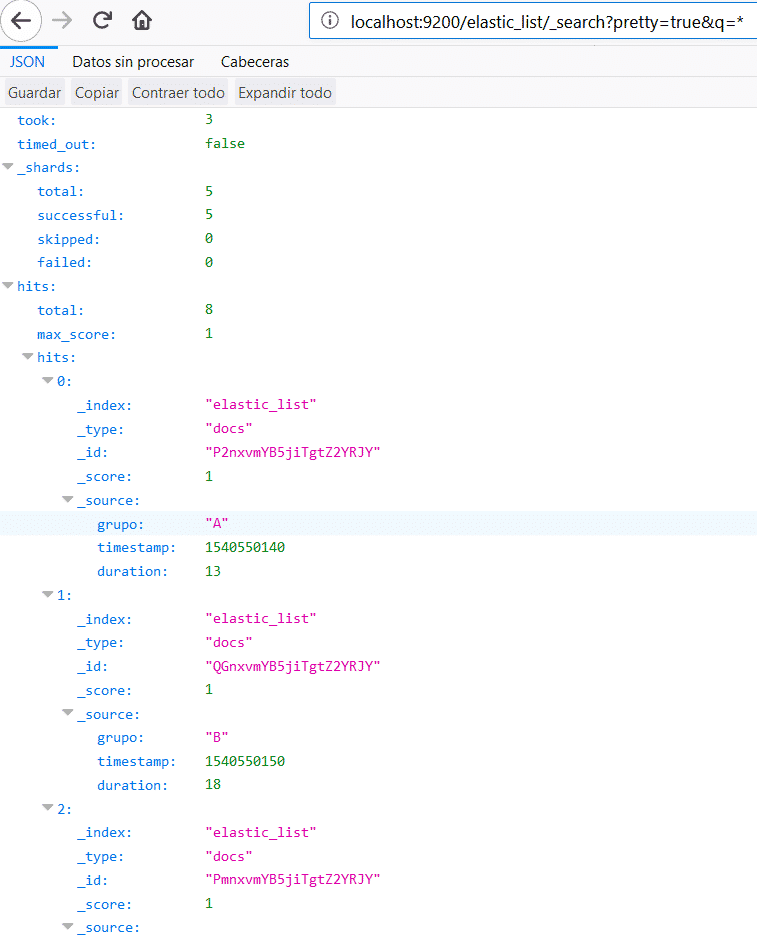
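To check the write from Spark itself, the documents can be read back with esRDD, which the same org.elasticsearch.spark._ import adds to SparkContext. What follows is only a minimal sketch: the object name ReadBack and the helper countByGroup are made up for this illustration, and it assumes the same local Elastic node and the "elastic_list/docs" resource used in the example.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.elasticsearch.spark._ // adds esRDD to SparkContext

// Hypothetical object name, for illustration only.
object ReadBack {

  // Pure helper (not part of any library): counts documents per "grupo" value.
  def countByGroup(docs: Seq[scala.collection.Map[String, Any]]): Map[String, Int] =
    docs
      .groupBy(d => d.getOrElse("grupo", "?").toString)
      .map { case (group, ds) => group -> ds.size }

  def main(args: Array[String]): Unit = {
    // Same connection settings as the write example (assumed local node).
    val conf = new SparkConf()
      .setAppName("ReadBack")
      .setMaster("local")
      .set("es.nodes", "localhost")
      .set("es.port", "9200")

    val sc = new SparkContext(conf)
    try {
      // esRDD yields (documentId, fields) pairs; only the fields are kept here.
      val docs = sc.esRDD("elastic_list/docs").values.collect().toSeq
      countByGroup(docs).toSeq.sortBy(_._1).foreach {
        case (group, n) => println(s"$group: $n")
      }
    } finally {
      sc.stop()
    }
  }
}
```

Collecting to the driver is fine for eight documents; for a real index the grouping would be better done on the RDD. Note that the counts grow each time the write job is re-run, since every run indexes the documents again under new ids.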

Verify the data written to Elastic

List all indices stored in Elastic

Command line:

curl --insecure 'http://localhost:9200/_cat/indices?v'

Web browser:

http://localhost:9200/_cat/indices?v

Show the data in the "elastic_list" index specifically

Command line:

curl --insecure -H 'Content-Type: application/json' -X GET 'http://localhost:9200/elastic_list/_search?pretty=true&q=*'

Web browser:

http://localhost:9200/elastic_list/_search?pretty=true&q=*
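The same search request can also be issued from Scala using only the standard library, which is handy for a quick check from the IDE. This is a rough sketch under stated assumptions: the object EsCheck and its functions searchUrl and fetch are hypothetical names introduced here, and the host, port and index are the ones used throughout this post.

```scala
import java.net.URLEncoder
import scala.io.Source

// Hypothetical helper object, for illustration only.
object EsCheck {

  // Builds the _search URL shown above; the query string is URL-encoded.
  def searchUrl(host: String, port: Int, index: String, query: String = "*"): String = {
    val q = URLEncoder.encode(query, "UTF-8")
    s"http://$host:$port/$index/_search?pretty=true&q=$q"
  }

  // Performs a plain HTTP GET and returns the response body as a String.
  def fetch(url: String): String = {
    val src = Source.fromURL(url, "UTF-8")
    try src.mkString finally src.close()
  }

  def main(args: Array[String]): Unit =
    // Requires the local Elastic node to be running.
    println(fetch(searchUrl("localhost", 9200, "elastic_list")))
}
```

With the default query, searchUrl("localhost", 9200, "elastic_list") produces exactly the URL used in the browser example, because URLEncoder leaves the asterisk unchanged.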
